Docker is a tool that lets administrators deploy applications and services easily. Used correctly, it can be very powerful, allowing known configurations to be replicated and deployed quickly and consistently. Containers have a known state on initialization, and (generally) run a single service at a time, making the services they encapsulate easier to understand and compose in complex systems.

That said, there are a number of mistakes that people make in their Docker journey which can have a detrimental security impact. Below, we will highlight some of these and advise you on how to secure your Docker journey, whether you self-host or run Docker in an enterprise.

Using Docker Rootless

Where available, it is important to make use of non-root (or rootless) containers and images, as this helps to mitigate the risk of container-breakout vulnerabilities. If a process does break out of the container, it lands in an unprivileged account and the damage can be limited. Containers typically run as a user with root privileges, and without user-namespace remapping that user is the same as root on the host machine. A container breakout can therefore compromise the host in a variety of ways, including (but not limited to):

- Accessing the filesystem of the host machine

- Accessing secrets and sensitive environment variables

- Escalation of privileges

- Access to resources on internal or firewalled network(s).

Good news though! Within a Dockerfile or when running our containers, we can specify the user with which to run commands and services within our container. Tools such as s6-overlay can also be used to run daemons as non-privileged users.
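Whichever way the user is set, a service can also double-check at start-up that it did not end up running as root. The following is a minimal sketch rather than anything taken from the tools above: `is_root` and `refuse_root` are hypothetical helper names, and the check assumes a Unix-like container where `os.geteuid` is available.

```python
import os
import sys
from typing import Optional


def is_root(uid: int) -> bool:
    """Return True when the given effective user ID belongs to root (UID 0)."""
    return uid == 0


def refuse_root(uid: Optional[int] = None) -> None:
    """Exit with an error message if the process is running as root.

    When uid is None, the effective UID is read from the operating system
    (os.geteuid exists on Unix-like systems only).
    """
    effective_uid = os.geteuid() if uid is None else uid
    if is_root(effective_uid):
        sys.exit("Refusing to start as root; configure a non-root user for this container.")
```

Calling `refuse_root()` as the first line of a service's entry point makes a misconfigured container fail loudly instead of quietly running with more privilege than intended.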

Docker Networks

Proper isolation of your Docker services is essential to reducing your attack surface. We all know to be careful when opening holes in our networks, but Docker configurations are often overlooked. A single misconfiguration can end in system compromise.

When securing Docker services, it is important to note that traditional host firewalls (iptables, UFW, etc.) can fail silently on Docker hosts. Docker manages its own iptables rules for published ports, so by default traffic to a published port does not pass through the rules that UFW manages, rendering those firewall rules useless for your containers. Be sure to configure your firewall rules specifically for your Docker services, and test the configuration extensively.

Secondly, binding ports to local IP addresses may only offer limited protection in certain conditions. There is a proposed fix for this in v28.0.0, but it is worth pointing out. Details can be seen here: https://github.com/moby/moby/issues/45610

In short, there is an issue whereby hosts on the same network as your Docker host can access the private subnets of your Docker containers by default. Binding to local IPs is still good practice and has a meaningful impact in managed cloud environments and specially configured networks.

My recommendations

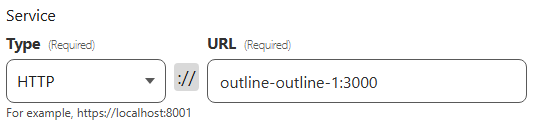

- Don’t publish ANY ports. When using a named network in bridge mode, it is possible for containers to have unfiltered access to each other, as they behave as though they are behind a local NAT gateway. If the dockerfile exposes ports by default, a reverse proxy may be able to connect directly to the container for external access, like so:

- Using Docker Networks. Docker Networks can be used to isolate and control which containers can talk to each other. This can help us to segregate Docker services from one another.
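To audit which services still publish ports, and on which interface, the `ports` entries of a Compose file can be classified mechanically. The sketch below assumes entries in the Compose short form `[HOST_IP:]HOST_PORT:CONTAINER_PORT[/PROTOCOL]`; the function name `published_scope` and its return labels are hypothetical. A `loopback` result only describes the bind address and, given the issue linked earlier, should not be read as a guarantee of isolation.

```python
import ipaddress


def published_scope(port_spec: str) -> str:
    """Classify a Compose short-syntax port mapping by the address it binds to.

    Returns "all-interfaces", "loopback" or "specific-address".
    """
    spec = port_spec.split("/", 1)[0]  # drop a trailing /tcp or /udp
    if spec.startswith("["):
        # Bracketed IPv6 host address, e.g. "[::1]:6001:6001"
        host_ip = spec[1:spec.index("]")]
    else:
        parts = spec.split(":")
        # Only the three-part form "IP:HOST_PORT:CONTAINER_PORT" carries a host IP
        host_ip = parts[0] if len(parts) == 3 else ""
    if not host_ip:
        return "all-interfaces"  # no host IP given: published on every interface
    address = ipaddress.ip_address(host_ip)
    if address.is_unspecified:  # 0.0.0.0 or ::
        return "all-interfaces"
    if address.is_loopback:  # 127.0.0.0/8 or ::1
        return "loopback"
    return "specific-address"
```

For example, `published_scope("8080:80")` reports `all-interfaces`, while `published_scope("127.0.0.1:8080:80")` reports `loopback`.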

Using the latest tag

We are all aware of the importance of making sure that our applications and services (and their dependencies) are up-to-date to ensure that identified vulnerabilities are patched. That said, blindly relying on the :latest tag can lead to breaking changes or vulnerabilities creeping in unnoticed.

My recommendations

Version pinning is your friend here. You can also get granular with your version pinning to ensure that you’re pinning critical services more strictly than non-critical services.

    # Exact version pinning, best for critical services
    image: postgres:17.2.1

    # Patch version pinning (major.minor fixed, patch releases still apply), good for non-critical services
    image: postgres:17.2

    # Major version pinning, perfect for development services, UAT or quick-release rings
    image: postgres:17

You can also use services such as Dependabot to automate version updates and ensure that changes are reviewed before release to production environments. (Make sure that you follow procedures for isolated staging of ANY updates!)
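How strictly an image reference is pinned can also be checked mechanically, for example as part of a review step. This is a rough sketch under stated assumptions: `pinning_level` and its labels are hypothetical, tags are assumed to start with a dotted numeric version, and the `digest` case (an `@sha256:` reference) goes beyond the tag examples above.

```python
import re

# Up to three dotted numeric components at the start of a tag, e.g. "17.2.1" or "v1.4"
_VERSION = re.compile(r"^v?(\d+)(?:\.(\d+))?(?:\.(\d+))?")


def pinning_level(image: str) -> str:
    """Describe how strictly an image reference is pinned."""
    if "@sha256:" in image:
        return "digest"
    # Drop the registry/namespace so a registry port is not mistaken for a tag
    name = image.rsplit("/", 1)[-1]
    if ":" not in name:
        return "floating"  # no tag at all means an implicit :latest
    tag = name.split(":", 1)[1]
    match = _VERSION.match(tag)
    if match is None:
        return "floating"  # latest, stable and other non-numeric tags
    components = sum(1 for group in match.groups() if group is not None)
    return {1: "major", 2: "major.minor", 3: "major.minor.patch"}[components]
```

A check like this could fail a review when a critical service reports anything looser than `major.minor.patch`.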

Access Management

Access control is a critical part of securing your Docker estate. This includes limiting container capabilities and permissions, as well as securing access to the underlying host.

Limiting container capabilities

One solid access control practice is to limit the capabilities of your containers. This can prevent an array of threats, from data exfiltration and privilege escalation to traffic sniffing and more. The Linux kernel breaks the privileges of root down into named permissions called capabilities. For example, the CAP_CHOWN capability is what allows the changing of file ownership.

I would advise dropping all capabilities (cap_drop: [ ALL ]) and configuring “No new privileges” (security_opt: [ no-new-privileges=true ]) to prevent the container from gaining new privileges. Individual capabilities can then be added back to your container as required:

    cap_add:
      - CHOWN
      - DAC_READ_SEARCH
      - FOWNER
      - SETGID
      - SETUID

You can read about the capabilities (and other details) here: https://docs.docker.com/engine/containers/run/#runtime-privilege-and-linux-capabilities
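To verify which capabilities a running process actually holds, the kernel exposes them as a hexadecimal bitmask on the `CapEff` line of `/proc/<pid>/status`. The sketch below decodes such a mask; the helper names are hypothetical and the lookup table is deliberately partial (bits 0 to 13 only), so any higher bit is reported by number.

```python
from typing import List

# Partial table of Linux capability bit numbers (bits 0-13 only)
CAPABILITY_BITS = {
    0: "CHOWN", 1: "DAC_OVERRIDE", 2: "DAC_READ_SEARCH", 3: "FOWNER",
    4: "FSETID", 5: "KILL", 6: "SETGID", 7: "SETUID", 8: "SETPCAP",
    9: "LINUX_IMMUTABLE", 10: "NET_BIND_SERVICE", 11: "NET_BROADCAST",
    12: "NET_ADMIN", 13: "NET_RAW",
}


def effective_mask(status_text: str) -> str:
    """Extract the CapEff hex mask from the text of /proc/<pid>/status."""
    for line in status_text.splitlines():
        if line.startswith("CapEff:"):
            return line.split(":", 1)[1].strip()
    raise ValueError("no CapEff line found")


def decode_capabilities(mask_hex: str) -> List[str]:
    """Translate a hexadecimal capability mask into capability names."""
    mask = int(mask_hex, 16)
    names = []
    bit = 0
    while mask >> bit:  # stop once no set bits remain above this position
        if (mask >> bit) & 1:
            names.append(CAPABILITY_BITS.get(bit, f"CAP_{bit}"))
        bit += 1
    return names
```

A mask of `00000000000000cd` decodes to exactly the five capabilities in the `cap_add` list above. Inside a Linux container, passing the contents of `/proc/self/status` to `effective_mask` shows what the current process really ended up with.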

Secrets management

Secrets management within Docker is important to ensure that we pass configuration and other sensitive information to our app or service without exposing it. When managing secrets, there are a couple of rules to stick to, but the most important of all are to never hard-code secrets into your Docker images and to ensure that secrets are never committed to git.

Securing Docker secrets is a hotly contested topic, with many different options available (Azure Key Vault, .env files, 1Password, Bitwarden, Keeper or AWS Secrets Manager, to name just a few!). Below are my suggestions; however, it is important to pick a solution that works best for you.

Use Canary Tokens

Canary Tokens are a great way to detect if your secrets have been compromised and used. These can be added to sensitive files and tokens as a warning. Put them in every .env file, continuous integration platform or secrets manager that you use.

Generate strong secrets

A secret should be treated like a password. Generate a secure secret and store it securely. This secret, as the name implies, is a secret. DO NOT share it with anyone. The script below will generate a secure secret which can be used in your Docker containers:

    import base64
    import os

    def generate_secret(secret_length=128):
        # Read cryptographically secure random bytes from the operating system
        secret = os.urandom(secret_length)
        # Encode the random bytes in base64 so the secret is printable
        encoded_secret = base64.b64encode(secret).decode('utf-8')
        return encoded_secret

    # Generate and print a secret from 48 random bytes (64 base64 characters)
    secret = generate_secret(secret_length=48)
    print(f"Generated Secret: {secret}")

An example outcome for this would be: Generated Secret: 0B6RPmf+JzK9TeDk9jdEjkjVmgX6Jodn19fXcVpA+FwFhFf0I1Qbgy1dYB97xpi8

Securely storing secrets

Docker secrets should never be placed into your docker-compose.yml files in plaintext. Instead, leverage tools such as a secrets manager or Docker Secrets. These can store the values encrypted and mount them securely into your container’s filesystem for referencing. There are many guides online to assist with this if you need help. My personal preference is to leverage tools such as https://github.com/Infisical/infisical or enterprise secrets management.
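On the application side, a secret that has been mounted as a file can be read at start-up instead of being taken from an environment variable. Docker mounts secrets under `/run/secrets/<name>` by default. The sketch below assumes that layout; `read_secret` and the secret name `db_password` are hypothetical.

```python
from pathlib import Path


def read_secret(name: str, secrets_dir: str = "/run/secrets") -> str:
    """Return the contents of a mounted secret file, without its trailing newline.

    Raises FileNotFoundError when the secret has not been mounted, so a
    misconfigured container fails at start-up rather than running without it.
    """
    secret_path = Path(secrets_dir) / name
    return secret_path.read_text(encoding="utf-8").rstrip("\n")
```

A service would then call something like `read_secret("db_password")` once during initialisation and keep the value in memory only.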

Monitoring

Once your Docker environments have been configured, we then need to ensure that we are monitoring them. This includes monitoring for filesystem access, excessive load (which may be indicative of a wider problem), user or permission changes, etc.

Monitoring can be one of the most important steps in securing your stack, but it is often overlooked. You could think you have the best firewall, the best network, and the best practices in place; but if you don’t verify, how can you ever trust your configurations? Verify explicitly, verify everything.

Check your ports

You will need to ensure that you’re opening only the ports which are relevant for the operation of your service(s), and protect these with access control lists. There are a couple of ways to check your ports; we can check the network with tools like nmap, or we can query the operating system with tools such as netstat.
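For a quick scripted check of a single port, a plain TCP connection attempt is often enough. This is a minimal sketch and not a replacement for the tools named above: `is_port_open` is a hypothetical helper that only reports whether a TCP handshake succeeds within the timeout.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name-resolution failures
        return False
```

Running this from a machine outside your network against a list of ports you expect to be closed gives a simple regression check that can be repeated after every configuration change.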

Testing Outside Your Network

You’ll need your current public IP, which you can find with services like ifconfig.me: curl https://ifconfig.me. Once you have your public IP, you now need to connect to an external network. You can use a hotspot or a dedicated server IP for this. You could also

    # Scan specific ports:
    nmap -A -p 80,443 --open --reason <your_publicIP>

    # Top 100 ports:
    nmap -A --top-ports 100 --open --reason <your_publicIP>

    # All ports:
    nmap -A -p1-65535 --open --reason <your_publicIP>

Example output:

    Starting Nmap 7.91 ( https://nmap.org ) at 2025-01-19
    Nmap scan report for <your_publicIP>
    Host is up (0.00033s latency).
    PORT    STATE SERVICE REASON
    80/tcp  open  http    syn-ack
    443/tcp open  https   syn-ack
    OS detection performed. Please report any incorrect results at https://nmap.org/submit/
    Aggressive OS guesses: Linux 3.10 - 4.10, Ubuntu 16.04 or 18.04 (Linux 4.15), Linux 4.4 (X86_64)
    Running: Linux 3.X, 4.X
    Nmap done: 1 IP address (1 host up) scanned in 4.25 seconds

Test Inside Your Network

Getting familiar with nmap is a no-brainer. I strongly recommend that you scan your local network and see what is going on. Scan your IoT devices, your TVs, your servers, your computers, your printer. You might actually be amazed (and terrified!) at what you find.

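    # Scan your localhost for open ports (nmap checks the 1,000 most common ports unless told otherwise, e.g. with -p-)
    nmap -sT localhost

    # Find hosts that are up on Docker network 172.18.0.1/16 (-sn skips the port scan)
    nmap -sn 172.18.0.1/16

View Open Ports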

Next, it’s worth getting familiar with tools like lsof which can show you granular network and disk activity. For example:

# Monitor specific port

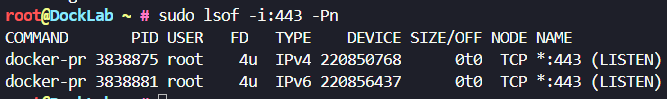

sudo lsof -i:443 -PnExample output:

Let’s break down what this is telling us:

- docker-pr is the name of the process that is listening on port 443. This indicates that the process is related to Docker and likely involves a Docker container, or some Docker-related network service.

- 3838875 and 3838881 are the process IDs of two separate instances of the docker-pr process. It’s possible that this process has multiple threads or separate processes.

- The processes are running as root, which typically means the process has elevated privileges. This could be a potential risk that we have just identified.

- 4u refers to the file descriptor for the connection. The "4" indicates the file descriptor number, and the "u" means the socket is open for both reading and writing.

- IPv4 and IPv6 indicate the versions of the IP protocol used for the connection.

- The DEVICE column shows an internal identifier for the device where the socket is located (likely a network interface).

- 0t0 means no specific size or offset is associated with the socket.

- The NODE column refers to an internal identifier for the socket (not always meaningful to end-users).

- TCP *:443 (LISTEN) indicates that the process is listening for TCP connections on port 443 on all interfaces.

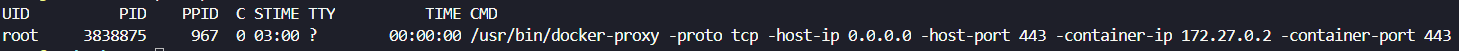

We can then run a command such as ps -fp 3838875 to see what the process is:

Here we can see that this is the Docker Proxy which has started this connection. I can then do a docker ps command to see what might be listening on :443

Here, we can see that the interface is opened by Kasm. This could present a risk as we have identified that this is also running as root, and so we may wish to go and ensure that this is locked down.

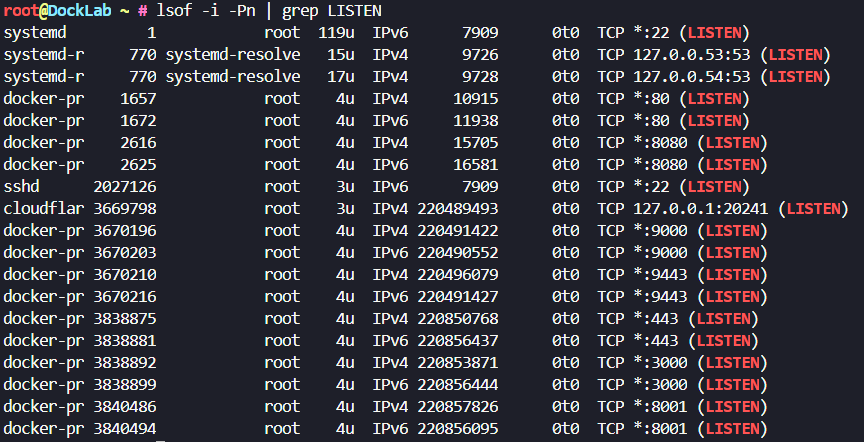

We can also look for all ports that are listening by using lsof -i -Pn | grep LISTEN to get an output that might look like so:

For my own requirements, I actually used this when validating whether my Linux servers were listening for CUPS: sudo lsof -i :631 | grep LISTEN which output no results, so I knew that CUPS was not an issue for me.
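The same triage can be scripted by parsing the `lsof -i -Pn` output and flagging listeners that are both owned by root and bound to every interface. The sketch below assumes the standard nine-column lsof layout; the helper names are hypothetical, and the sample lines are illustrative only (the PIDs come from the walkthrough above, while the DEVICE values and the `python3` entry are made up).

```python
from typing import Dict, List

# Illustrative sample only: DEVICE values and the python3 line are made up
SAMPLE = """\
COMMAND       PID USER   FD   TYPE  DEVICE SIZE/OFF NODE NAME
docker-pr 3838875 root    4u  IPv4 1000001      0t0  TCP *:443 (LISTEN)
docker-pr 3838881 root    4u  IPv6 1000002      0t0  TCP *:443 (LISTEN)
python3      4242 app     5u  IPv4 1000003      0t0  TCP 127.0.0.1:8000 (LISTEN)
"""

WILDCARD_HOSTS = {"*", "0.0.0.0", "[::]"}


def parse_listeners(lsof_output: str) -> List[Dict[str, object]]:
    """Extract command, PID, user, host and port from LISTEN lines."""
    listeners = []
    for line in lsof_output.splitlines():
        fields = line.split()
        if len(fields) < 10 or fields[-1] != "(LISTEN)":
            continue  # skips the header and any non-listening sockets
        host, _, port = fields[-2].rpartition(":")
        listeners.append({
            "command": fields[0],
            "pid": int(fields[1]),
            "user": fields[2],
            "host": host,
            "port": int(port),  # numeric because lsof was run with -P
        })
    return listeners


def exposed_root_listeners(listeners: List[Dict[str, object]]) -> List[Dict[str, object]]:
    """Keep only listeners owned by root and bound to all interfaces."""
    return [entry for entry in listeners
            if entry["user"] == "root" and entry["host"] in WILDCARD_HOSTS]
```

Applied to the sample, only the two docker-pr entries on port 443 are flagged; the loopback-only listener owned by an unprivileged user is left out.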

File monitoring

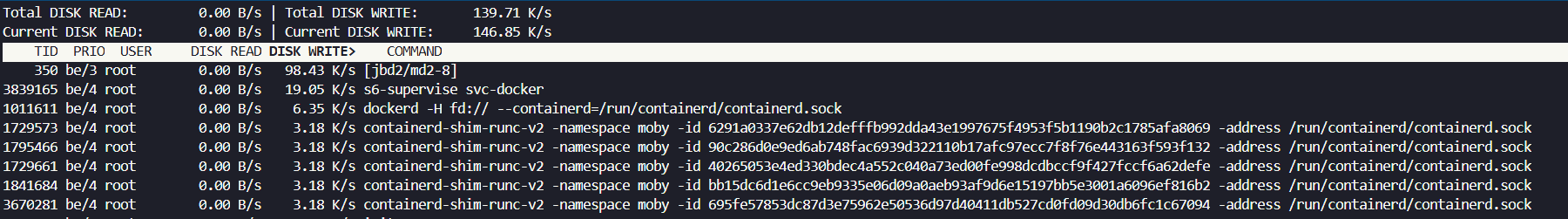

To identify which processes are using the most hard drive bandwidth, you can use iotop - You may need to install this with sudo apt install iotop and then run the command with sudo iotop

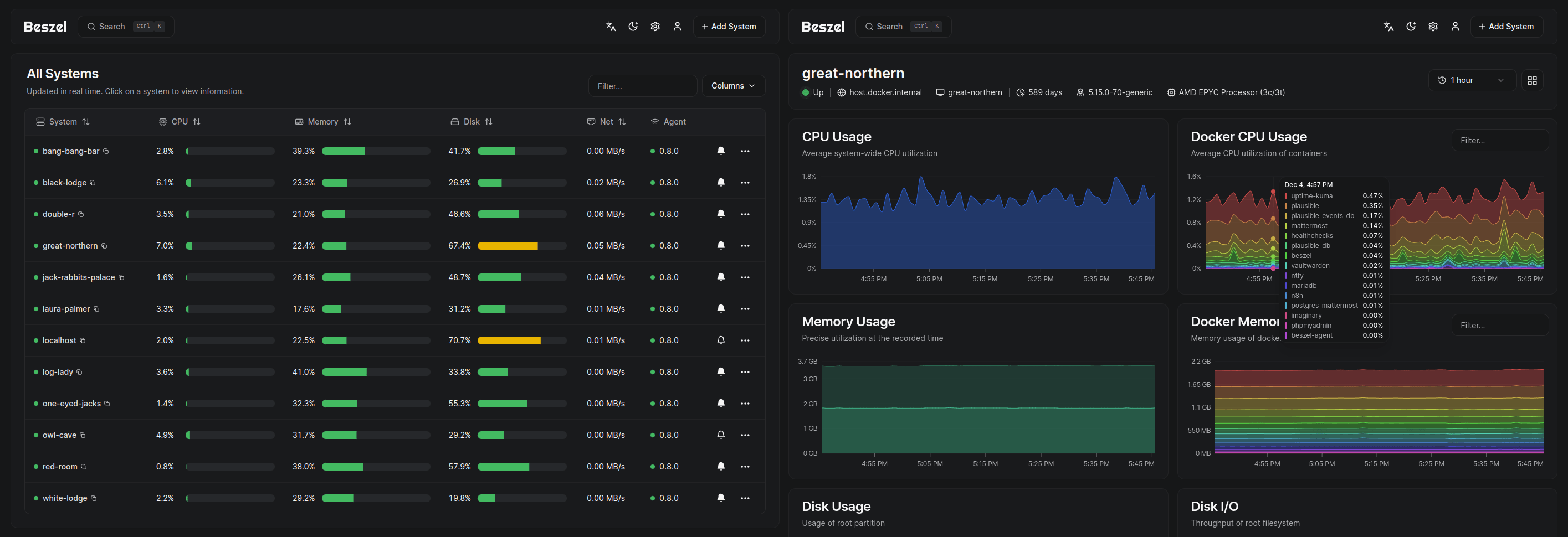

Docker Monitoring made easy

As well as the tools listed above, it is also possible to leverage monitoring solutions such as Netdata, LogicMonitor, Beszel (and others!) which can provide granular data in an easy to read dashboard. Below is an example of docker monitoring from Beszel, a free and open source solution for Docker monitoring.

Checklist

When configuring your Docker containers, following the practices above can help you to seriously harden your services. Be sure to follow a production-ready checklist (such as the one below) before you deploy publicly.

- Secrets - Are all secrets randomly generated and stored securely? Have you ensured that any git commits are excluding your secrets?

- Updates - Do you have a strategy for updating your containers? Are services pinned to versions? Have you risk-assessed the use of :latest tags? Are updates automated, and who gets alerts when they fire?

- Network - Are you ensuring that you have only exposed the necessary ports? Are your containers separated by logical Docker networks?

- Firewall - Does your host follow a default Deny rule? Explicitly allow only what is needed.

- Reverse Proxy - Reverse proxies such as Nginx, Traefik, Caddy or Cloudflare Tunnels can add a layer of authentication to your services.

- Canary Tokens - Place canary tokens alongside your secrets and ensure that you get alerted for these.

- Monitoring - Monitor your systems for unusual activity. Ensure that you have monitored your current state and verified that the configuration you desire is working as intended.

- Backup - Ensure that you have a backup solution for your Docker containers. Hint, I have a guide for that here: https://marshsecurity.org/backing-up-docker-volumes-with-ease/

- Least-Privilege Access - Are you using non-root (or rootless) containers where possible? Are your containers read-only where it is viable to do so?