We all know that it is important to ensure that our services and systems are regularly backed up. Recently, I have had a number of people asking about backups for Docker volumes - particularly with reference to Homebox (https://homebox.software/) which I help to maintain.

Backing up the containers

Backing up the docker volumes

For my requirements, I need to back up a large number of Docker volumes, as I run multiple services from my host. To manage this, I split my Docker paths by service: /opt/docker/{service}. For example, my Docker installation for Homebox runs under /opt/docker/Homebox.
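To make the per-service layout concrete, here is a minimal sketch that lists the service folders under a Docker root by looking for a docker-compose.yml in each one. The list_services function name and the temporary demo tree are illustrative only, and it assumes each service keeps its compose file at the top level of its folder.

```shell
#!/bin/bash
# Print the name of every folder under a root that contains a docker-compose.yml.
# list_services is a hypothetical helper, not part of the backup script itself.
list_services() {
    local root=$1 dir
    for dir in "$root"/*/; do
        # Folders without a compose file (for example a scripts folder) are skipped
        [[ -f "${dir}docker-compose.yml" ]] && basename "$dir"
    done
    return 0
}

# Demo against a throwaway directory tree
demo_root=$(mktemp -d)
mkdir -p "$demo_root/Homebox" "$demo_root/scripts/logs"
touch "$demo_root/Homebox/docker-compose.yml"

services=$(list_services "$demo_root")
echo "$services"

rm -rf "$demo_root"
```

Filtering on the compose file means helper folders that live next to the services are never treated as containers.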

Now, at the root of my Docker directory, I will create a new scripts folder to hold my backup script:

mkdir -p ~/opt/docker/scripts

cd ~/opt/docker/scripts

We will also create a new logs folder, to store any errors:

mkdir logs

and we will also create our backup directory to store the files:

mkdir -p ~/backups/docker

Once completed, we can then create our new backup script:

nano docker-backup.sh

#!/bin/bash

# Directories

DOCKER_DIR=~/opt/docker

BACKUP_ROOT=~/backups/docker

LOG_DIR="$DOCKER_DIR/scripts/logs"

ERROR_LOG="$LOG_DIR/backup-errors.txt"

# Retention period for backups (in days)

RETENTION_DAYS=5

# Ensure log directory exists

mkdir -p "$LOG_DIR"

# Start with a clean error log

: > "$ERROR_LOG"

# Function to log errors

log_error() {

echo "$(date): $1" >> "$ERROR_LOG"

}

# Function to clean up old backups

cleanup_old_backups() {

local backup_dir=$1

echo "Cleaning up old backups in: $backup_dir"

find "$backup_dir" -type f -name "*.tar.gz" -mtime +$RETENTION_DAYS -exec rm -v {} \; || {

log_error "Failed to clean up old backups in: $backup_dir"

}

}

# Iterate through each subfolder in the docker directory

for CONTAINER_DIR in "$DOCKER_DIR"/*; do

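# Ensure it's a directory with a compose file (skips helper folders such as scripts)

if [[ -d "$CONTAINER_DIR" && -f "$CONTAINER_DIR/docker-compose.yml" ]]; then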

CONTAINER_NAME=$(basename "$CONTAINER_DIR")

BACKUP_DIR="$BACKUP_ROOT/$CONTAINER_NAME"

TIMESTAMP=$(date +"%Y%m%d_%H%M%S")

BACKUP_FILE="$BACKUP_DIR/${CONTAINER_NAME}_backup_$TIMESTAMP.tar.gz"

echo "Processing container: $CONTAINER_NAME"

# Ensure backup directory exists

mkdir -p "$BACKUP_DIR"

# Clean up old backups for this container

cleanup_old_backups "$BACKUP_DIR"

# Stop the container

echo "Stopping container: $CONTAINER_NAME"

docker compose -f "$CONTAINER_DIR/docker-compose.yml" down || {

log_error "Failed to stop container: $CONTAINER_NAME"

continue

}

# Backup the container directory and volumes

echo "Backing up container: $CONTAINER_NAME"

tar -czf "$BACKUP_FILE" "$CONTAINER_DIR" || {

log_error "Failed to back up container: $CONTAINER_NAME"

docker compose -f "$CONTAINER_DIR/docker-compose.yml" up -d || log_error "Failed to restart container: $CONTAINER_NAME"

continue

}

echo "Backup completed: $BACKUP_FILE"

# Restart the container

echo "Restarting container: $CONTAINER_NAME"

docker compose -f "$CONTAINER_DIR/docker-compose.yml" up -d || {

log_error "Failed to restart container: $CONTAINER_NAME"

continue

}

echo "Container restarted: $CONTAINER_NAME"

fi

done

# Final message

echo "Backup process completed. Check $ERROR_LOG for any errors."

Notes:

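- This will stop each container, back up the content, and then restart the container. (Stopping first is advised for transactional databases, so that files are not copied mid-write.)

- This script will keep 5 days' worth of backups and delete anything older.

- Errors are logged to backup-errors.txt in the logs folder ($LOG_DIR in the script). The script empties this file at the start of every run; remove the line under "Start with a clean error log" if you would rather keep errors from previous runs.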
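The retention rule can be tried safely before trusting it with real backups. The sketch below is a dry run under stated assumptions: it uses a temporary folder and two dummy files (the file names are made up), and relies on GNU touch and find. Note that find rounds file age down to whole days, so -mtime +5 matches files last modified six or more days ago.

```shell
#!/bin/bash
RETENTION_DAYS=5

# Throwaway folder with one stale and one fresh dummy archive
demo_dir=$(mktemp -d)
touch -d "10 days ago" "$demo_dir/old_backup.tar.gz"
touch "$demo_dir/new_backup.tar.gz"

# Dry run: print what would be removed without deleting anything
to_delete=$(find "$demo_dir" -type f -name "*.tar.gz" -mtime +"$RETENTION_DAYS" -print)
echo "Would delete: $to_delete"

# Real clean-up, equivalent in effect to the -exec rm used in the script
find "$demo_dir" -type f -name "*.tar.gz" -mtime +"$RETENTION_DAYS" -delete
remaining=$(ls "$demo_dir")
echo "Remaining: $remaining"

rm -rf "$demo_dir"
```

Swapping -delete for -print is a cheap way to preview any change to RETENTION_DAYS.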

Now, we need to make our script executable:

chmod +x ~/opt/docker/scripts/docker-backup.sh

and run it with the following command:

~/opt/docker/scripts/docker-backup.sh

Running the command, I can see that this has successfully backed up my containers. (Note that this may take a short while to run if you have a number of containers).
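A quick way to confirm that an archive is at least readable is to have tar list it end to end. This is a minimal sketch rather than part of the backup script: verify_archive is a hypothetical helper, and the good and truncated archives are generated in a temporary folder purely for the demo.

```shell
#!/bin/bash
# Return 0 if tar can read the whole archive, non-zero otherwise.
verify_archive() {
    tar -tzf "$1" > /dev/null 2>&1
}

demo_dir=$(mktemp -d)
mkdir -p "$demo_dir/service/data"
echo "hello" > "$demo_dir/service/data/file.txt"
tar -czf "$demo_dir/good.tar.gz" -C "$demo_dir" service

# Simulate an interrupted backup by keeping only the first 20 bytes
head -c 20 "$demo_dir/good.tar.gz" > "$demo_dir/bad.tar.gz"

if verify_archive "$demo_dir/good.tar.gz"; then good_status="OK"; else good_status="CORRUPT"; fi
if verify_archive "$demo_dir/bad.tar.gz"; then bad_status="OK"; else bad_status="CORRUPT"; fi
echo "good.tar.gz: $good_status"
echo "bad.tar.gz: $bad_status"

rm -rf "$demo_dir"
```

A listing only proves the archive can be read, not that its contents are what you expect, which is why a restore test is still worthwhile.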

Testing the backups


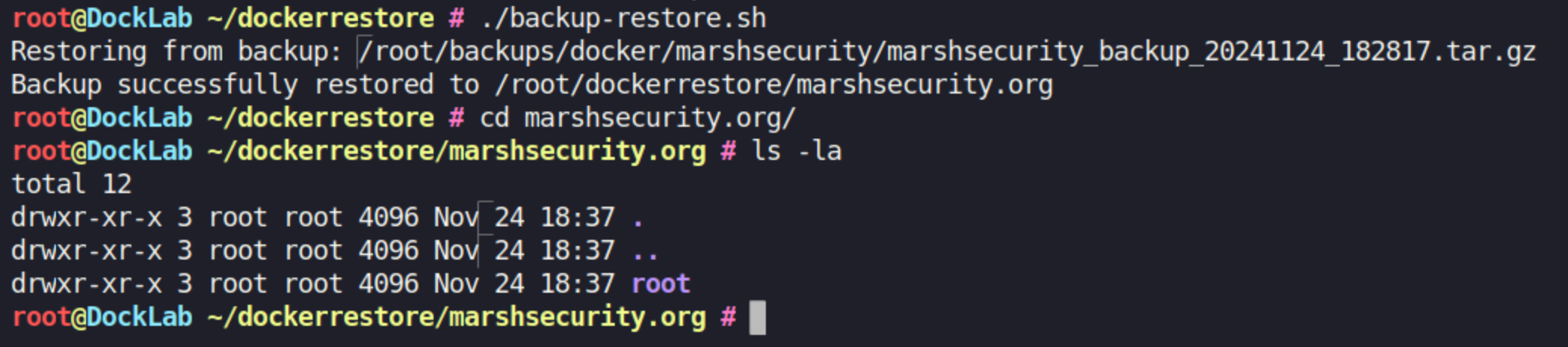

Now that we have our tar.gz files, let's actually test them to make sure that they work as we'd expect. We'll take the backup for marshsecurity.org and restore it to ~/dockerrestore/marshsecurity.org/. This can be simplified, but I have a script for testing my backups, so I will use this:

#!/bin/bash

# Backup and restore directories

BACKUP_DIR=~/backups/docker/marshsecurity

RESTORE_DIR=~/dockerrestore/marshsecurity.org

# Ensure the restore directory exists

mkdir -p "$RESTORE_DIR"

# Find the latest backup file

LATEST_BACKUP=$(find "$BACKUP_DIR" -type f -name "*.tar.gz" -printf "%T@ %p\n" | sort -nr | head -n 1 | cut -d' ' -f2-)

if [[ -z "$LATEST_BACKUP" ]]; then

echo "No backup found in $BACKUP_DIR"

exit 1

fi

echo "Restoring from backup: $LATEST_BACKUP"

# Extract the backup into the restore directory

tar -xzf "$LATEST_BACKUP" -C "$RESTORE_DIR" || {

echo "Failed to restore backup from $LATEST_BACKUP"

exit 1

}

echo "Backup successfully restored to $RESTORE_DIR"

Running this, I can see that the files were successfully extracted:

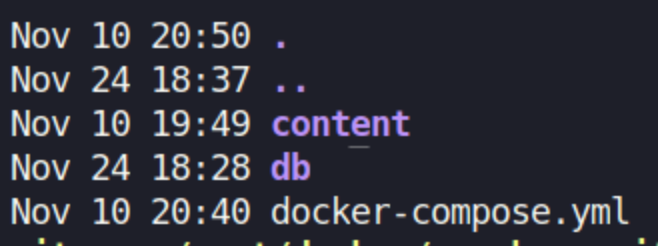
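Beyond checking the output by eye, a restore can be compared against its source mechanically. The sketch below is illustrative only: it builds a small dummy service folder (the content and db names are placeholders), archives it relative to the folder with -C, restores it elsewhere, and uses diff -r to compare the two trees. For a live service the comparison only holds while the container is stopped, since running services keep changing their files.

```shell
#!/bin/bash
src=$(mktemp -d)    # stands in for a service folder
work=$(mktemp -d)   # holds the archive and the restored copy

mkdir -p "$src/content" "$src/db"
echo "post body" > "$src/content/post.txt"
echo "db dump" > "$src/db/data.sql"

# Archive relative to the service folder, then restore somewhere else
tar -czf "$work/backup.tar.gz" -C "$src" .
mkdir "$work/restore"
tar -xzf "$work/backup.tar.gz" -C "$work/restore"

# diff -r exits 0 only when both trees hold the same files and contents
if diff -r "$src" "$work/restore" > /dev/null; then
    verdict="match"
else
    verdict="differ"
fi
echo "Restore check: $verdict"

rm -rf "$src" "$work"
```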

Now let's dive into the root of the restored directory and confirm that it contains everything that we expect.

As you can see, this contains the backup of content and db, as well as a point-in-time snapshot of our docker-compose.yml file.

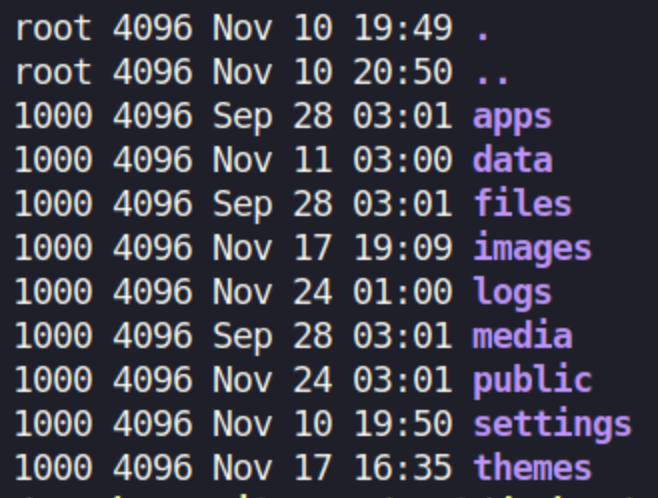

For verification, we can also see that our content folder is completely backed up:

Backup Storage

Offloading the backups

Now that we have the backups, we need to work out a way of offloading these to an offsite location for safekeeping. There are multiple ways to do this, including (but not limited to):

- Backblaze

- Cloudflare R2

- NAS

- Separate Server

- Storage Boxes

- External Media (USB, Portable Hard Drive, etc.)

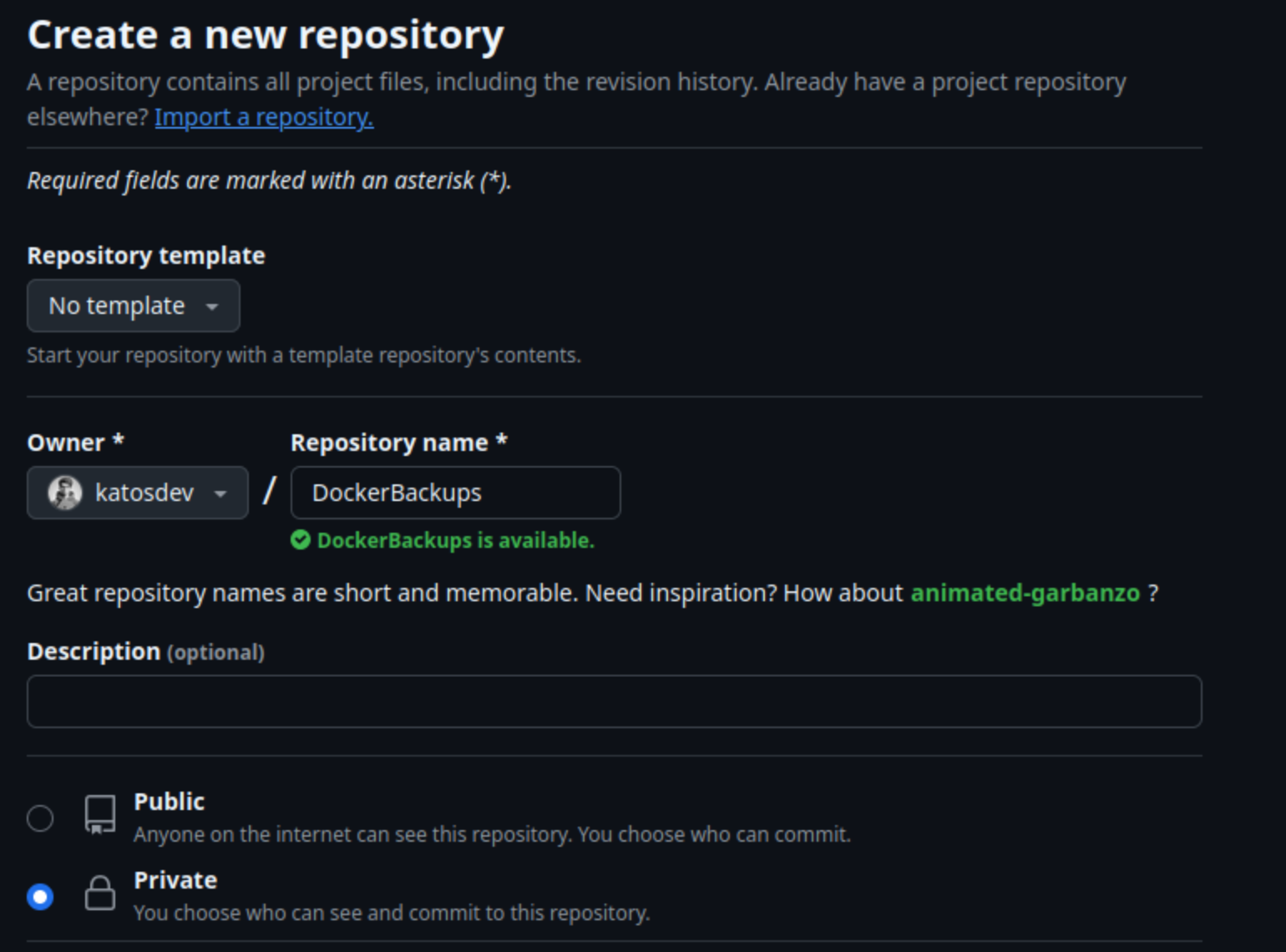

However, for the purposes of this blog, we will leverage a private repository on GitHub to back up our files. First, let's create a new private repository.

Next, we will need to create our SSH key for GitHub to use:

Generate the key using ssh-keygen -t ed25519 -C "your_email@example.com" (substituting your own email address). When prompted for where you'd like to save the key, enter ~/.ssh/github_backup and leave the passphrase empty.

Next, add the SSH key to the agent using:

eval "$(ssh-agent -s)"

ssh-add ~/.ssh/github_backup

Some distros may have an issue with ~/. In this instance, use /root/.ssh/github_backup instead.
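Keys added with ssh-add only live in the current agent session, so a script started outside that session may not see them. One possible workaround, sketched here under the assumption that the key sits at ~/.ssh/github_backup, is to point git at the key directly through the GIT_SSH_COMMAND environment variable. The KEY_PATH variable name is my own.

```shell
#!/bin/bash
# Assumption: the private key was saved to ~/.ssh/github_backup
KEY_PATH="$HOME/.ssh/github_backup"

# Tell git which ssh command to use; IdentitiesOnly stops ssh from
# offering other keys it may find before this one
export GIT_SSH_COMMAND="ssh -i $KEY_PATH -o IdentitiesOnly=yes"

echo "git will connect using: $GIT_SSH_COMMAND"
```

Placing the export near the top of a backup script would make any git push in that script use this key without relying on a running agent.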

Now that we have our keys, let's add the public key to GitHub. First, run cat ~/.ssh/github_backup.pub to obtain the public key, and copy the output to your clipboard.

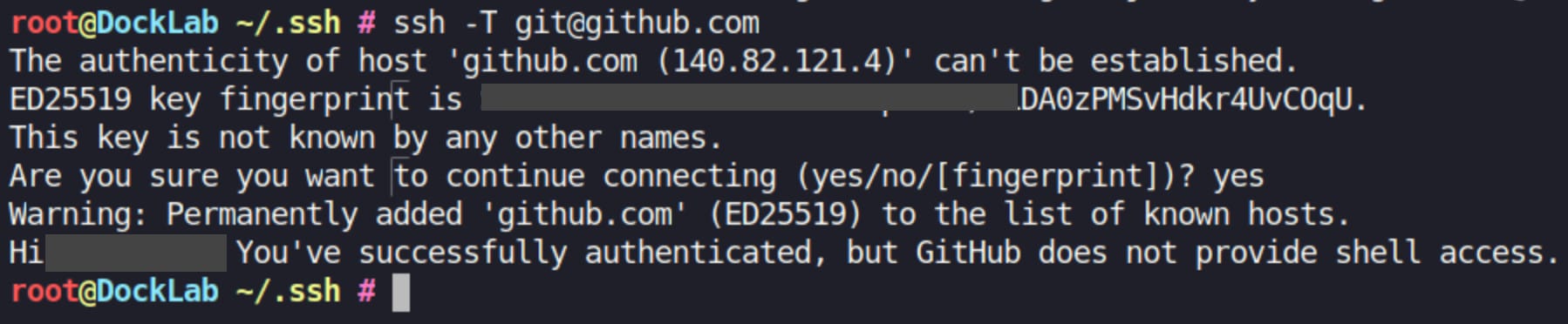

Next, navigate to: https://github.com/settings/keys and create a new SSH key. We will call this Github-Backup and enter the public key that we just created. We can test this by running ssh -T git@github.com from our server. You should see a result similar to below:

Now that this is done, we need to clone the backup repository. We will run: git clone git@github.com:YourUsername/YourBackupRepo.git ~/github-backups to do this.

Lastly, let's amend our backup script to make use of this repository and test:

#!/bin/bash

# Variables

DOCKER_DIR=~/opt/docker/

BACKUP_DIR=~/opt/backups/docker

EXCLUDED_CONTAINERS=("your excluded containers here") # All lowercase for case-insensitive comparison

LOG_FILE=~/opt/docker/scripts/logs/backup-errors.txt

GITHUB_REPO_DIR=~/github-backups

GITHUB_BRANCH="main"

# Ensure required directories exist

mkdir -p "$(dirname "$LOG_FILE")"

mkdir -p "$GITHUB_REPO_DIR"

# Function to check if a directory is empty

is_empty() {

[ -z "$(ls -A "$1" 2>/dev/null)" ]

}

# Function to convert a string to lowercase

to_lower() {

echo "$1" | tr '[:upper:]' '[:lower:]'

}

# Change to GitHub repository directory

cd "$GITHUB_REPO_DIR" || exit 1

# Ensure the GitHub repository is initialized

if [ ! -d ".git" ]; then

echo "Initializing GitHub repository..."

git init

git remote add origin git@github.com:yourusername/your-repo.git

fi

# Ensure we're on the correct branch

git checkout -B "$GITHUB_BRANCH"

# Loop through subdirectories in the Docker directory

for container_dir in "$DOCKER_DIR"/*; do

# Get the container name and convert to lowercase

container_name=$(basename "$container_dir")

container_name_lower=$(to_lower "$container_name")

# Skip excluded containers

if [[ " ${EXCLUDED_CONTAINERS[@]} " =~ " ${container_name_lower} " ]]; then

echo "Skipping $container_name (excluded)" >> "$LOG_FILE"

continue

fi

# Skip if the directory is empty

if is_empty "$container_dir"; then

echo "Skipping $container_name (empty directory)" >> "$LOG_FILE"

continue

fi

# Create a container-specific backup directory

backup_path="$BACKUP_DIR/$container_name"

mkdir -p "$backup_path"

echo "Backing up $container_name..." >> "$LOG_FILE"

# Stop the container

if ! docker compose -f "$container_dir/docker-compose.yml" down; then

echo "Failed to stop $container_name. Skipping backup." >> "$LOG_FILE"

continue

fi

# Backup the container's data, excluding the "logs" folder

tarball="${container_name}_$(date +%Y%m%d).tar.gz"

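if ! tar --exclude="logs" -czf "$backup_path/$tarball" -C "$container_dir" .; then

echo "Failed to back up $container_name." >> "$LOG_FILE"

# Bring the container back up even though the backup failed

docker compose -f "$container_dir/docker-compose.yml" up -d || echo "Failed to restart $container_name." >> "$LOG_FILE"

continue

fi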

# Restart the container

if ! docker compose -f "$container_dir/docker-compose.yml" up -d; then

echo "Failed to restart $container_name." >> "$LOG_FILE"

fi

# Add backup to GitHub repository

dest_dir="$GITHUB_REPO_DIR/$(date +%Y/%m/%d)/$container_name"

mkdir -p "$dest_dir"

cp "$backup_path/$tarball" "$dest_dir"

cd "$GITHUB_REPO_DIR" || exit 1

git add "$(date +%Y/%m/%d)/$container_name"

git commit -m "Backup for $container_name on $(date +%Y-%m-%d)"

# Push to GitHub repository

if ! git push origin "$GITHUB_BRANCH"; then

echo "Failed to push $container_name to GitHub. Check permissions or repository limits." >> "$LOG_FILE"

continue

fi

done

# Delete backups older than 5 days

find "$BACKUP_DIR" -type f -name "*.tar.gz" -mtime +5 -exec rm -f {} \;

echo "Backup and upload process completed. Check $LOG_FILE for details."

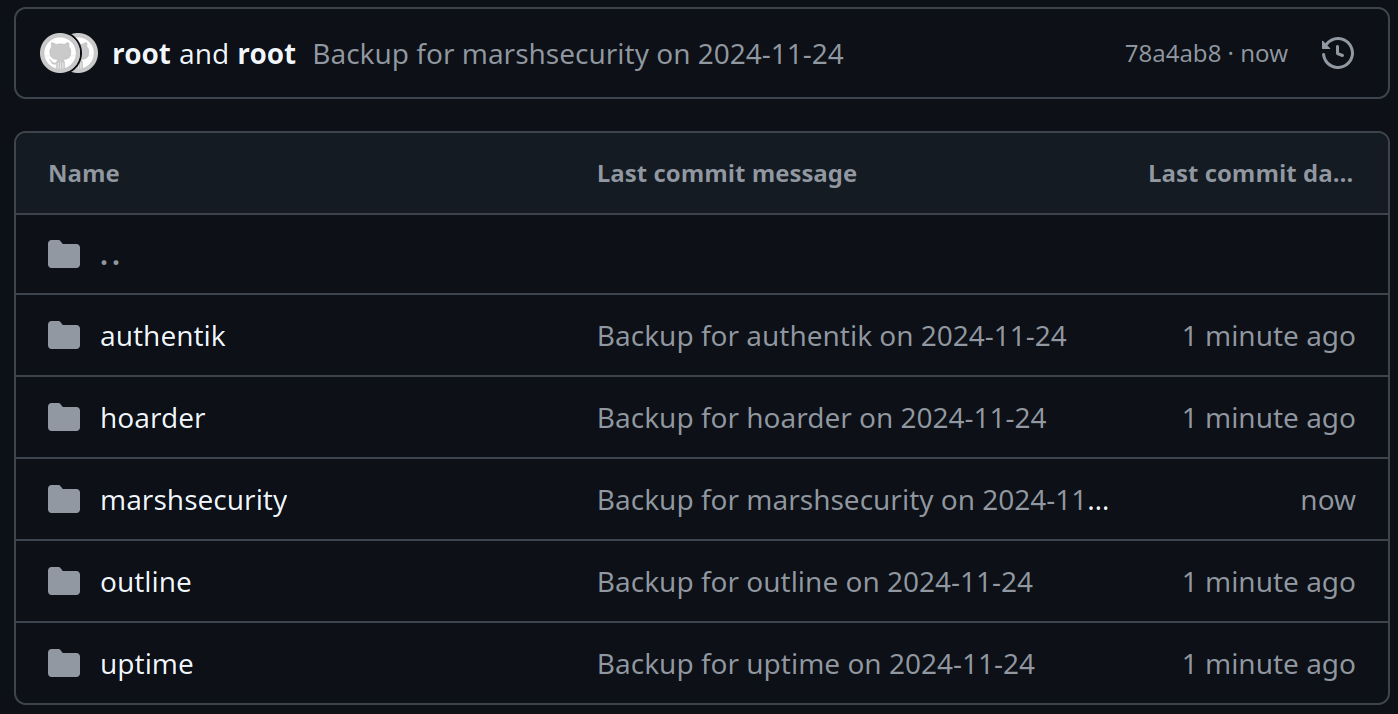

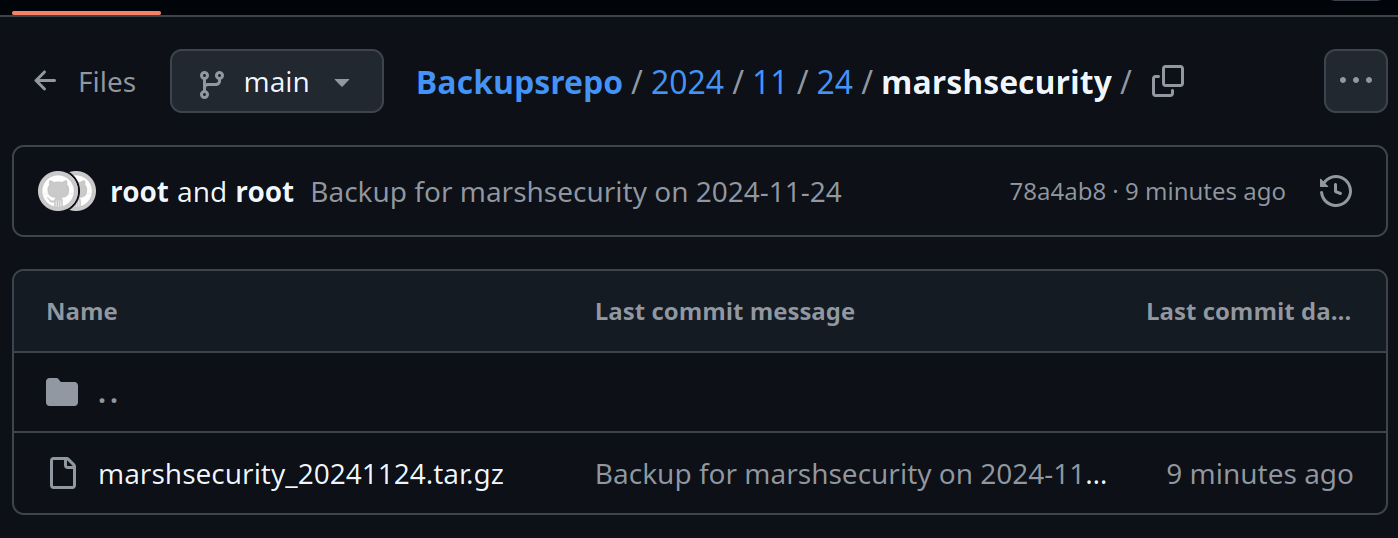

This works by creating folder structures as follows: Year - Month - Day - Service. For example: 2024/11/01/marshsecurity.org.
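The dated layout can be sketched as a small helper. backup_dest is a hypothetical function name, and the optional third argument is my own addition so the date is computed once and reused, which avoids a mismatch if a run happens to cross midnight.

```shell
#!/bin/bash
# Build the Year/Month/Day/Service path inside the backup repository.
# Usage: backup_dest <repo_root> <service> [YYYY/MM/DD]
backup_dest() {
    local repo_root=$1 service=$2 day=${3:-$(date +%Y/%m/%d)}
    printf '%s/%s/%s\n' "$repo_root" "$day" "$service"
}

# Fixed date, matching the example in the text
example=$(backup_dest "$HOME/github-backups" "marshsecurity.org" "2024/11/01")
echo "$example"

# No date given: falls back to today's date
today=$(backup_dest "/tmp/repo" "homebox")
echo "$today"
```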

With a successful test, you should see that the files have been uploaded to GitHub, and can verify this in your repository:

Automation

Now that we have our script, have confirmed that it backs up to GitHub, and have verified the contents of the backup, we need to automate it to run on a schedule using a cron job.

For this, run crontab -e to open crontab.

We will add this line:

0 0 * * * ~/opt/docker/scripts/docker-backup.sh

This will run at midnight every night. You can adjust the schedule to suit your needs: https://crontab-generator.org/

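Note that your backups will be committed per container. This is to help us to stay under GitHub's 2 GB push size limit. Be aware that GitHub also blocks individual files larger than 100 MiB, so an archive above that size will need Git LFS or a different destination.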
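Because oversized archives are a common reason for a rejected push, it can help to flag them before committing. This is a minimal sketch, assuming a threshold of 100 MiB per file (GitHub's documented block for regular files at the time of writing) and GNU find and truncate. find_oversized is a hypothetical helper, and the demo files are sparse dummies in a temporary folder.

```shell
#!/bin/bash
# List .tar.gz files larger than a limit given in MiB (default 100).
find_oversized() {
    local dir=$1 limit_mib=${2:-100}
    find "$dir" -type f -name "*.tar.gz" -size +"${limit_mib}M"
}

demo_dir=$(mktemp -d)
truncate -s 1K "$demo_dir/small.tar.gz"     # well under the limit
truncate -s 101M "$demo_dir/large.tar.gz"   # sparse file just over the limit

oversized=$(find_oversized "$demo_dir")
echo "Too large for a plain push: $oversized"

rm -rf "$demo_dir"
```

Anything the helper prints would need Git LFS or one of the other offload destinations listed earlier.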

And that's it! You now have your backups running automatically, and dumping to GitHub.

Happy contain(er)ing! 🙂